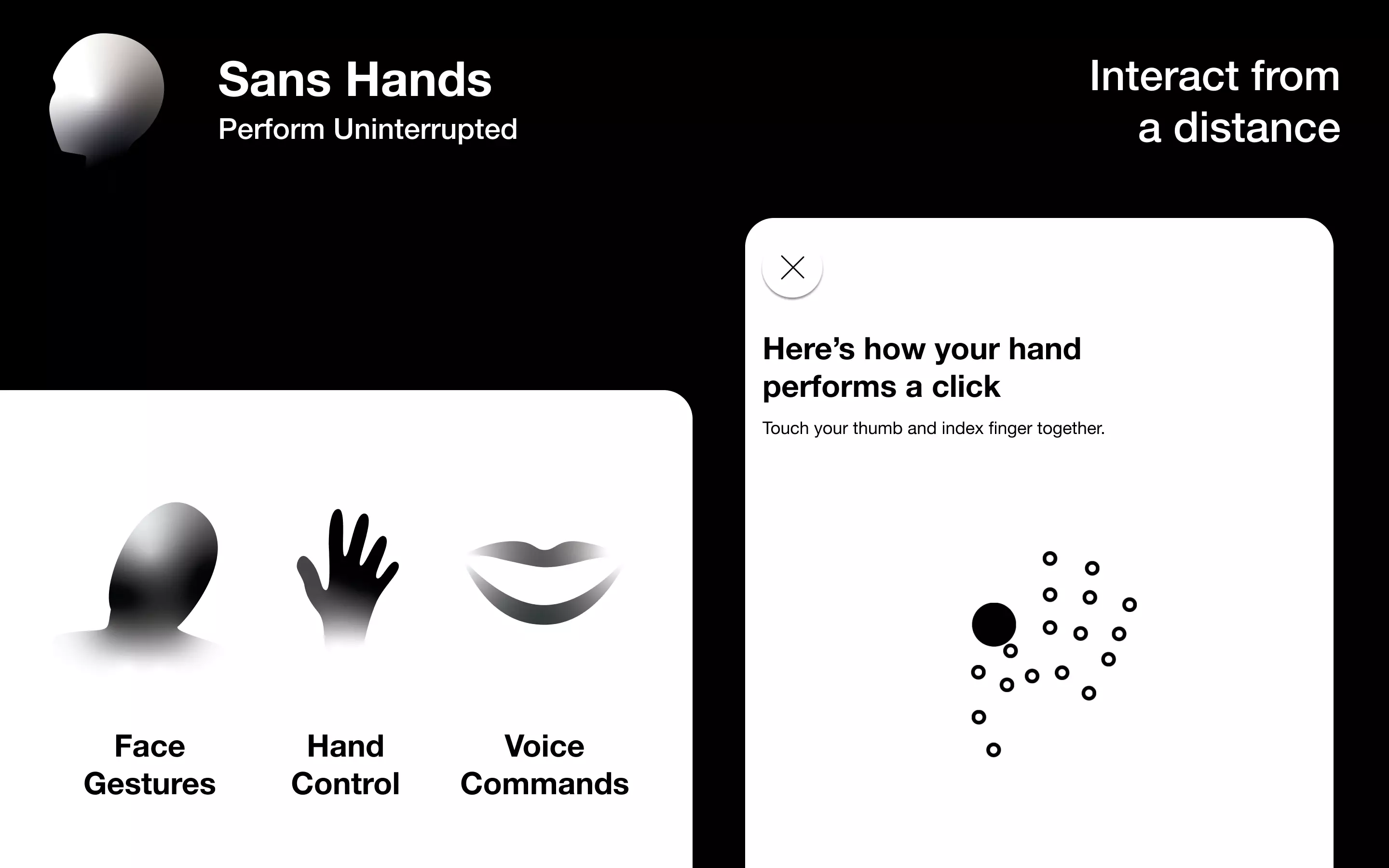

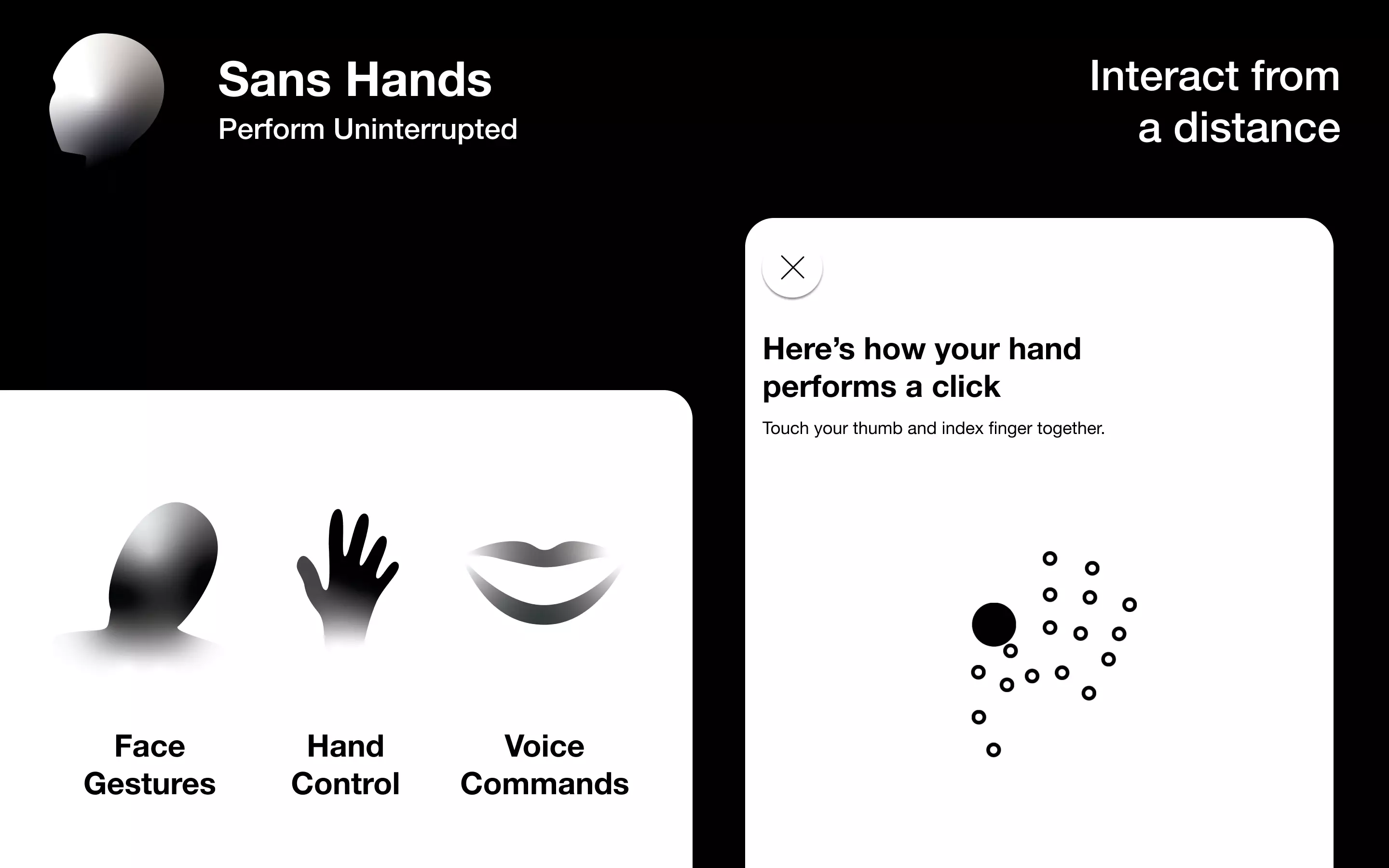

Sans Hands: Interact From a Distance

Interact from a distance with a computer, iPhone or iPad using your hand or your face.

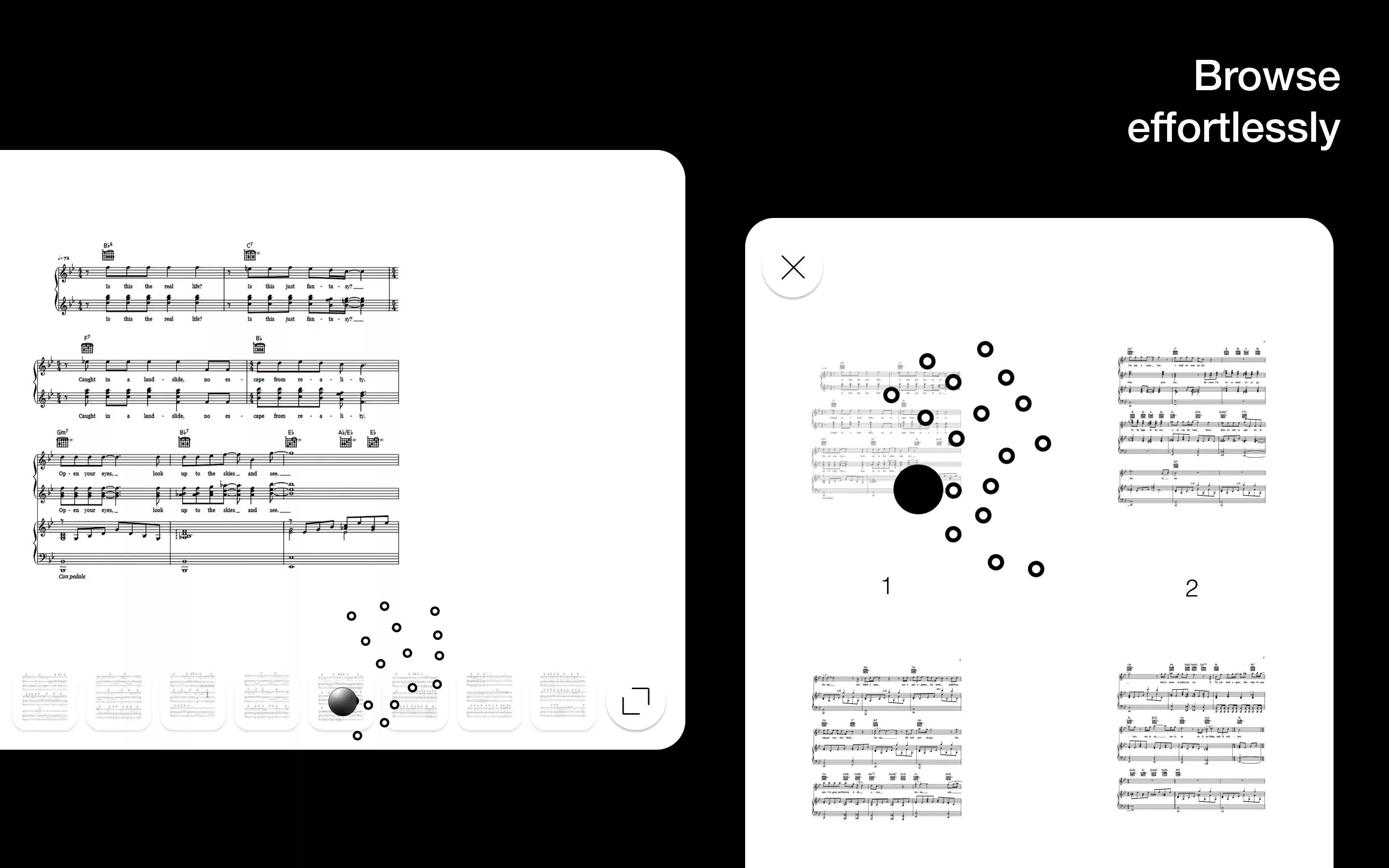

We are proud to announce the release of our mixed reality hand control technology, which allows you to read and browse effortlessly from a distance. Available via the Apple App Store on iPhone, iPad and Mac. Requires iOS 14+ / macOS 11+.

Special thanks to Ioannis Dimitroulas, Antonio Nicolás de la Hera Martinez (M.D. & Philosophy Doctor) and María del Mar Gómez Castillo for all the help along the way.

The application was made possible thanks to the education in Mathematics provided by The British School in the Netherlands; the Master of Engineering in Mechanical Engineering from Imperial College London and the Master of Fine Arts in Products of Design from the School of Visual Arts.

Could I just flick my hand?

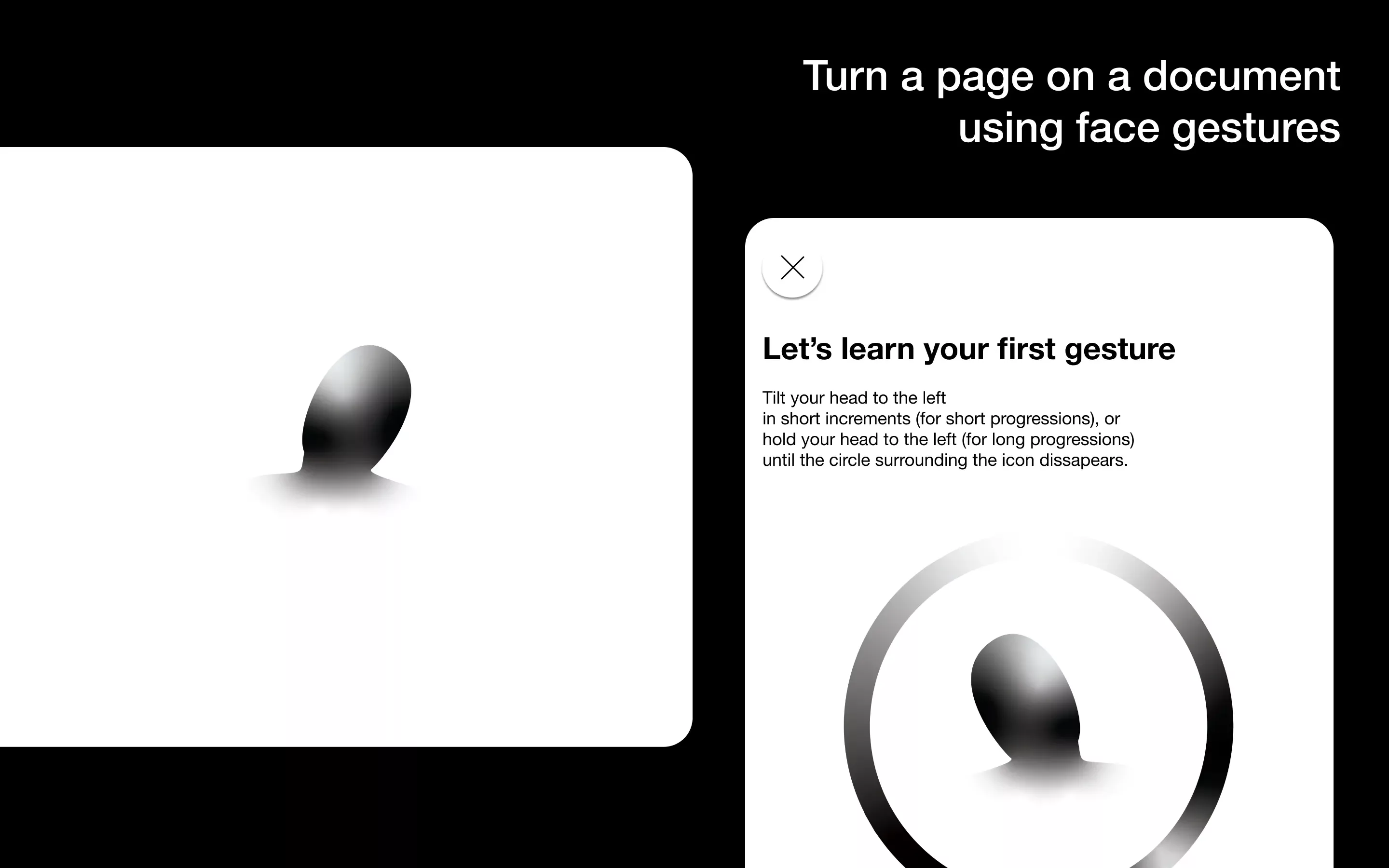

In August 2020 our founder, Oscar de la Hera Gomez, was in Croatia resolving matters close to the heart. Around this time, Ioannis Dimitroulas had carried out user research into Sans Hands, which surfaced the need for a face gestural interface that would allow people to read from a distance. The uses people described ranged from reading in bed to turning a page of a recipe book whilst cooking or turning a music score whilst playing an instrument.

Encouraged by this progress and with a desire to learn more, Oscar decided to carry out an In-Depth Interview (IDI) with a trusted critic, Ed Stoner, Founder of New Dynamic, who is known for his excellence in strategy and insights.

During this IDI, Oscar and Ed discussed the potential of the existing face gestural interface as well as where the innovation could be taken. At this point, Ed mentioned that he had no interest in the face gestures but would definitely use the app on a Mac to read if he could turn a page with his hand, by forming the gesture below and flicking it to one side.

What if I could flick my hand to one side instead?

He said that he thought this would revolutionize the reading experience. The idea coincided with Apple’s Vision Framework update, which allowed developers to detect hand pose.

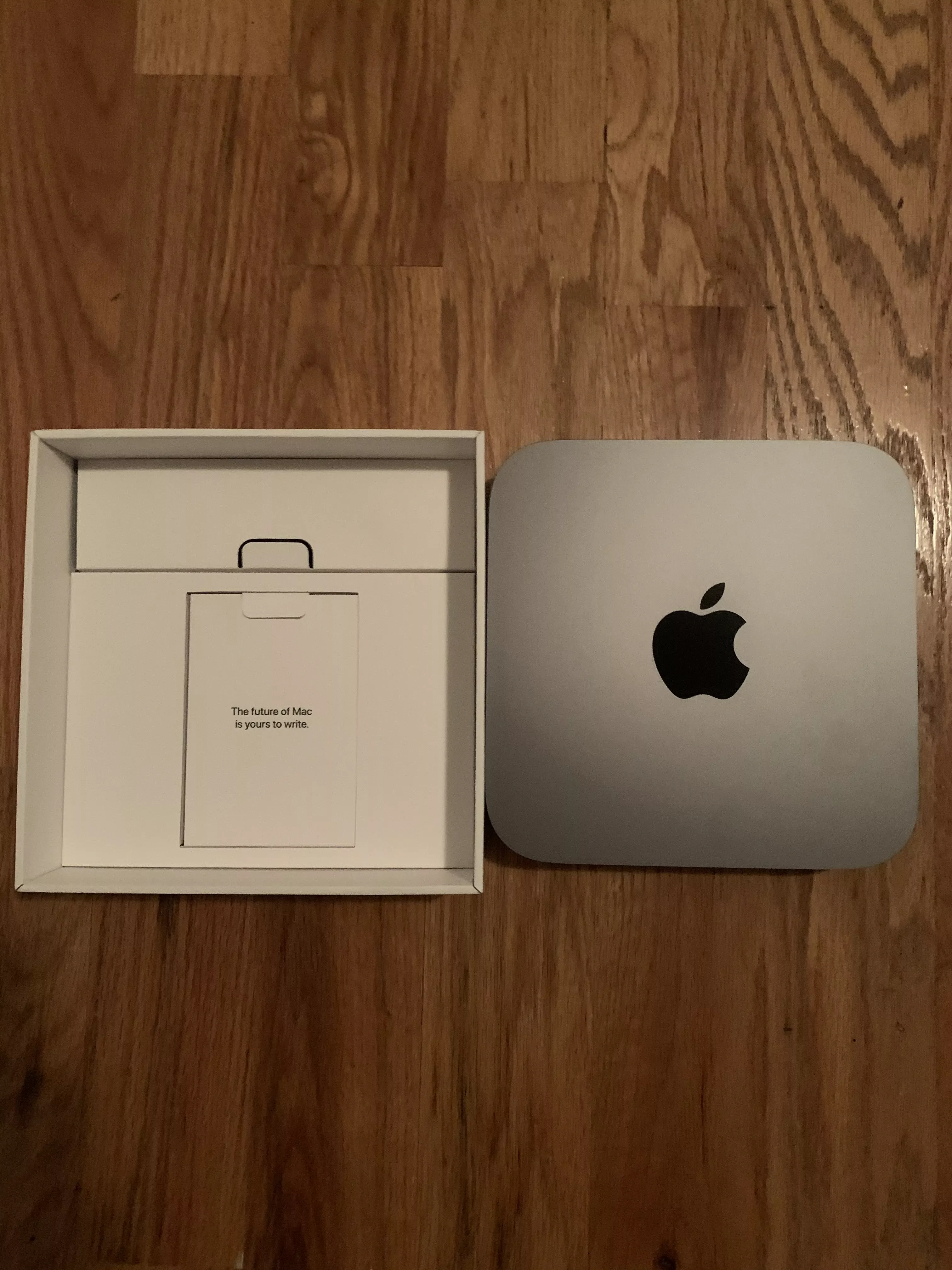

The Future of Mac is Yours to Write

As he was preparing to return to New York, Oscar received an email from Apple Inc.

delasign had been selected for the Universal Quick Start Program, which granted us access to the Developer Transition Kit (DTK), making Oscar one of the first to hold an Apple Silicon computer. To Oscar’s delight, the computer came with a note inviting him to Write the Future.

We were honored to be one of the first to develop on Apple Silicon

Upon landing in New York, Oscar only had one thing on his mind: to confirm that Apple’s Vision Framework worked on the Mac. Making use of Apple’s Open Source hand pose detection project, Oscar put together a project to learn how the technology worked and confirmed that it worked on iPhone and iPad. However, when he tested it on an Intel Macintosh running Catalina, it failed. Weeks later, upon receiving the DTK, the hand pose detection worked flawlessly, to Oscar’s relief. This demonstrated that it would work on Apple Silicon and, on release, across Macs running Big Sur.

From Machine Learning Algorithms to Hand Control

To confirm whether Ed’s vision could come true, Oscar began to visually test the system. He discovered that:

- Apple’s hand pose detection did not work well at all angles.

- Apple’s Vision Framework made it difficult to determine gestures because there was no way to determine hand orientation.

- Apple’s Vision Framework provided joints as normalized points across the screen, with the center of the screen being shown as (0.5, 0.5), the top left as (0.0, 0.0) and the bottom right as (1.0, 1.0).
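
As an illustration of the last point, here is a minimal sketch of how a normalized point could be mapped to pixels. It assumes the convention described above, with (0.0, 0.0) at the top left; the function name `to_pixels` and the screen size are hypothetical and are not taken from the Sans Hands code base.

```python
def to_pixels(point, width, height):
    """Map a normalized (x, y) point to pixel coordinates.

    Assumption: (0.0, 0.0) is the top left of the screen and
    (1.0, 1.0) is the bottom right, as described in the article.
    """
    x, y = point
    return (x * width, y * height)


# Hypothetical usage: the center of a 1920 x 1080 screen.
center = to_pixels((0.5, 0.5), 1920, 1080)
```

Working in normalized units until the last possible moment keeps the gesture logic independent of the device's screen size.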

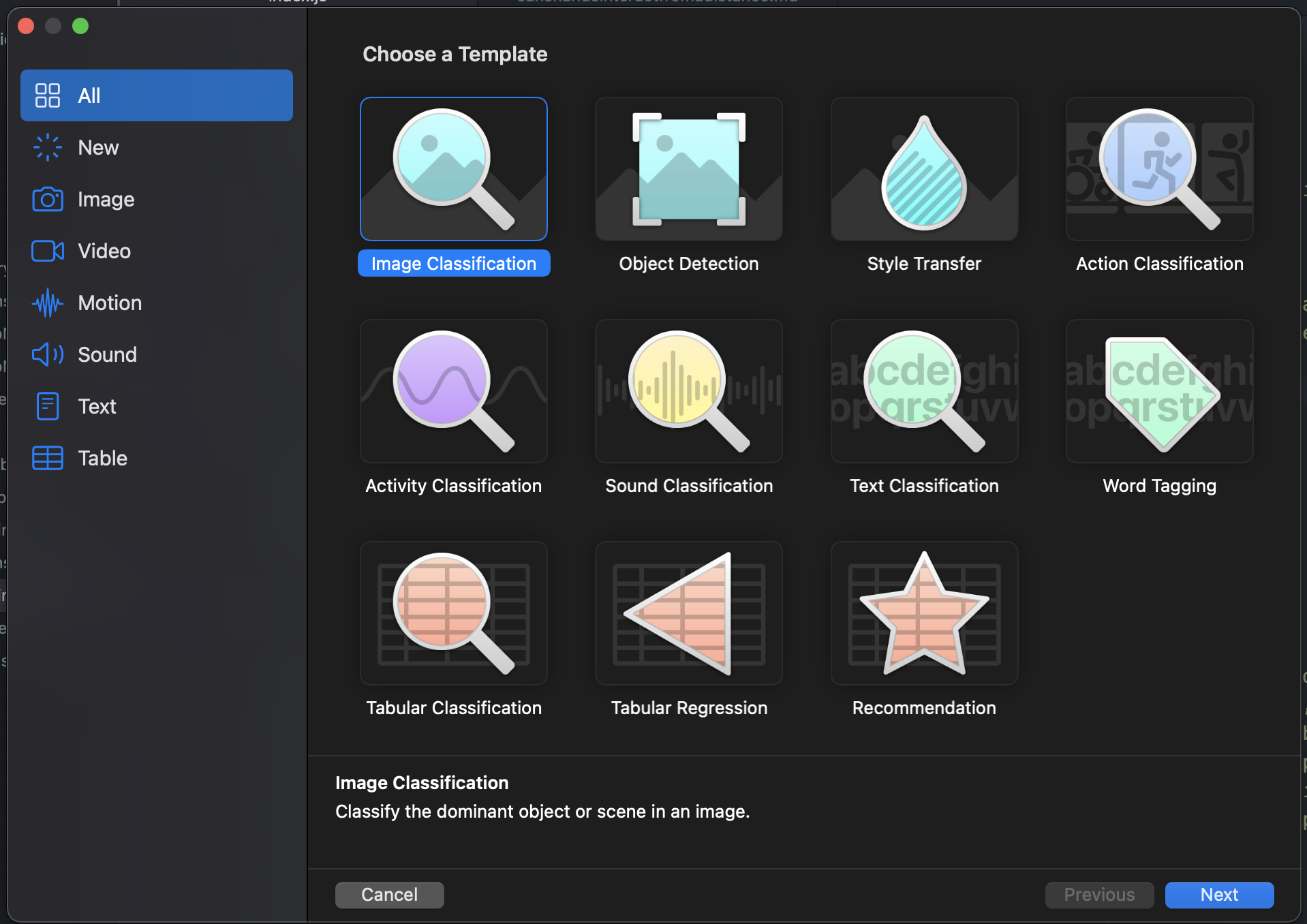

Perplexed and discouraged, Oscar pondered how he could make it happen. Perhaps, using Apple’s new Create ML feature, he could create a Machine Learning Algorithm that determined when the user carried out a specific gesture.

Here are the classifiers Create ML offers.

Collecting 40+ videos and importing them into Apple’s Create ML, Oscar put together an algorithm composed of three options: None, Flick Left and Flick Right. Oscar then used WWDC’s session on Create ML to make the algorithm work on iPad and the Silicon Macintosh. This is where he discovered that:

- Apple’s Core ML only registered motion, demonstrating the need for an algorithm to transform static motion into registerable frames.

- Apple’s 2020 Action Classifier used body pose and not hand pose, suggesting that the Machine Learning algorithm would not work, as any motion of the wrist would trigger it. This was problematic as we were looking for a specific pose with a specific motion.

Going back to step one, Oscar stared at the project he had put together to test Apple’s Hand Pose Detection across devices. A single question was on his mind: How could I make this perform a click and a drag?

Projection & Stabilization

Upon recreating Sans Hands in iOS from the Flutter prototype, Oscar integrated the hand pose detection algorithm into the interface & added basic functionality that allowed for a click via a cursor.

Playing with his new toy, he noticed that it was difficult for the hand to touch anything found on the edges of the interface, with the hand pose detection creating crooked hands. What if we projected the hand from the center to make it visibly look correct?
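
One possible reading of this projection idea is sketched below: each detected point is pushed away from the center of the screen by a gain, so that a hand moving in a comfortable region near the center can still reach the edges. The article does not describe the actual formula, so the function name `project_from_center` and the gain value are assumptions made for illustration.

```python
def project_from_center(point, gain=1.5, center=(0.5, 0.5)):
    """Scale a normalized point away from the screen center.

    Assumption: a simple linear gain about the center, clamped to the
    screen bounds. This is an illustrative guess, not the app's method.
    """
    cx, cy = center
    x = cx + (point[0] - cx) * gain
    y = cy + (point[1] - cy) * gain

    def clamp(value):
        # Keep the projected point inside the normalized screen.
        return max(0.0, min(1.0, value))

    return (clamp(x), clamp(y))


# Hypothetical usage: a point halfway to the corner reaches the corner.
corner = project_from_center((0.75, 0.25), gain=2.0)
```

A gain greater than 1 also multiplies any noise in the detected position by the same factor, so jitter in the detection becomes more visible on screen.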

This change made the hand move well across the screen, allowing Oscar and his group of testers at the Neuehouse to click. However, this projection accentuated the instability of the algorithm, making the hand jitter more as it was projected across the screen. To fix this, Oscar applied the knowledge taught by Dr. Mihalo Ristic at Imperial College London’s Mechanical Engineering, Advanced Control course to create an algorithm that stabilized the hand.
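
The article does not say which stabilization algorithm was used. As a stand-in, the sketch below shows one common way to damp jitter: an exponential moving average, a simple low-pass filter. The class name `PointSmoother` and the `alpha` parameter are hypothetical.

```python
class PointSmoother:
    """Exponential moving average over a stream of (x, y) points.

    Assumption: this is a generic low-pass filter chosen for
    illustration, not the stabilizer used in Sans Hands.
    """

    def __init__(self, alpha=0.3):
        if not 0.0 < alpha <= 1.0:
            raise ValueError("alpha must be in (0, 1]")
        self.alpha = alpha
        self.state = None  # last smoothed point, None until the first update

    def update(self, point):
        if self.state is None:
            # The first sample initializes the filter.
            self.state = point
        else:
            a = self.alpha
            self.state = (
                a * point[0] + (1 - a) * self.state[0],
                a * point[1] + (1 - a) * self.state[1],
            )
        return self.state


# Hypothetical usage: feed each new cursor position through the filter.
smoother = PointSmoother(alpha=0.5)
first = smoother.update((0.0, 0.0))
second = smoother.update((1.0, 1.0))
```

A smaller `alpha` gives a steadier hand at the cost of more lag, so the value is a trade-off between stability and responsiveness.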

This worked fantastically but the visualization was not appealing. This led Oscar to dive deep into the Metal Shading Language and Core Image to visualize the hand in real-time.

Visualization

Diving deep into Metal Shaders, Oscar combined the True Depth Camera, Apple’s Vision and Core Image frameworks with a custom Metal shading algorithm to produce the following visualizer. However, due to its limited range and the limited number of devices that support it, Oscar decided to save this for the future, as a paid feature.

The Normalized Hand

The final challenge involved creating a reliable hand pose detection algorithm that, regardless of scale, position and orientation, would allow an Apple device to determine a click, a drag or any other gesture.

Inspired by Einstein’s thought experiments, Oscar began to write down how this would work. A pinned point; algebra to position the points; a vector to scale the hand and a rotation element - and then use that hand to determine the events. Design by Trigonometry. All elements taught by Begoña Alfaro, Dave McGee, David Napper and Tadhg Naughton during his time at the British School in the Netherlands.
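
The ingredients listed above (a pinned point, a scaling vector and a rotation) can be sketched as follows. This is a minimal illustration under stated assumptions, not the Normalized Hand itself: the joint names `wrist` and `middle_mcp`, the choice of the wrist as the pinned point and the function name `normalize_hand` are all hypothetical.

```python
import math


def normalize_hand(joints, pin="wrist", reference="middle_mcp"):
    """Return joints in a frame that ignores position, scale and rotation.

    Assumptions: `joints` maps hypothetical joint names to (x, y) points.
    The pinned joint moves to the origin, the pin-to-reference vector is
    scaled to length 1 and rotated onto the positive y axis.
    """
    px, py = joints[pin]
    rx, ry = joints[reference]
    dx, dy = rx - px, ry - py
    length = math.hypot(dx, dy)
    if length == 0:
        raise ValueError("pin and reference joints coincide")
    ux, uy = dx / length, dy / length  # unit vector from pin to reference

    normalized = {}
    for name, (x, y) in joints.items():
        # Translate so the pin is the origin, then scale by the reference length.
        tx, ty = (x - px) / length, (y - py) / length
        # Rotate so that (ux, uy) lands on (0, 1).
        normalized[name] = (tx * uy - ty * ux, tx * ux + ty * uy)
    return normalized


# Hypothetical usage with three joints.
hand = {"wrist": (0.5, 0.5), "middle_mcp": (0.75, 0.5), "index_tip": (0.75, 0.25)}
normalized = normalize_hand(hand)
```

Once every frame is expressed in this frame of reference, a gesture can be described by where the fingertips sit relative to the palm, whatever the hand's size or place on screen.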

For motion, should it be the motion of the joints? Or of the cursor? And how would that work? What about projection? These questions made Oscar’s mind spin and made him sick. It was time to put thought to code and to figure it out step by step. 24 hours later, the Normalized Hand was complete.

Perfecting the Touch with Users

After implementing the normalized hand and reverse engineering a human touch into the touchless domain, we were ready to test it on users. Applying the lessons we had learned at the MFA Products of Design, we began to research interactions. Placing the tutorial in front of 20 testers at the Neuehouse demonstrated two things:

- Users’ hands naturally jittered, indicating that a drag had to be less sensitive to allow for clicks.

- The most important lesson, which was not in the app, was that the palm of the hand had to consistently face the camera in order for it to work well.
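
The first finding can be illustrated with a small dead zone: a press only becomes a drag once the cursor has travelled beyond a threshold, so natural jitter still registers as a click. The function name `classify_press` and the threshold value are assumptions made for this sketch, not values from the app.

```python
import math


def classify_press(path, drag_threshold=0.05):
    """Classify a press as a click or a drag.

    Assumptions: `path` is the list of normalized cursor positions sampled
    while the press gesture was held, and `drag_threshold` is a
    hypothetical dead-zone radius in normalized screen units.
    """
    if not path:
        return "none"
    start = path[0]
    # Furthest distance the cursor moved from where the press began.
    max_travel = max(math.dist(start, point) for point in path)
    return "drag" if max_travel > drag_threshold else "click"


# Hypothetical usage: small jitter around the starting point.
jittery_press = classify_press([(0.5, 0.5), (0.51, 0.5), (0.5, 0.49)])
```

Raising the threshold makes clicks more forgiving but means a drag takes longer to start.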

Launch & The Future

On March 28th 2021, we launched Sans Hands v2.0, which allows users to interact from a distance on iPhone, iPad and Mac. It is a moment our founder will never forget, as the app is revolutionary, the first of its kind and demonstrates the potential of these applications. Our next steps involve:

- Implementing the tutorial as an App Clip to give people a taster without the app.

- Making use of application analytics to gather insights, which will be transformed into a subscription model for the app.

- Localizing the application to work in English, Spanish, French, Dutch and Greek.

- Creating an Android PoC for the normalized hand.

For any questions, please contact inquires@delasign.com